Briefly, here's a description of the water system. There are two water tanks, one located at ground level and the other on the roof deck of a two-storey house. Water from the mains fills the ground level tank (GT) via a solenoid valve whenever it's not full. The roof deck tank (RT) is normally filled through another solenoid valve, but when water pressure is insufficient to fill it within a predetermined amount of time, a pump kicks in to do the job.

There are sensors in both tanks. Two types are employed. The primary sensors are simple stainless steel tubes of varying lengths that detect water at different levels of the tank. The other type of sensor is a float switch, used to detect a full tank or an overflow condition. The float has a magnet inside which closes a reed switch in the body of the assembly whenever it's buoyed up by water. For both tanks there are sensors for water levels of 25%, 50%, 75% and 100%. RT has an additional sensor to detect an overflow condition (this is simply a float switch located above the 100% water level sensor).
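The roof deck tank's "valve first, pump on timeout" behaviour can be sketched roughly as below. This is not the actual firmware, just an illustration under assumptions: the 600-second timeout is made up (the real value is only "predetermined"), filling is assumed to start whenever RT is not full, and the names `RtFill`, `RtFillMode` and `rt_fill_tick` are hypothetical. The sketch also says nothing about what the solenoid valve does while the pump is running.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumed value; the real timeout is not stated. */
#define RT_FILL_TIMEOUT_S 600u

typedef enum { RT_IDLE, RT_FILL_VALVE, RT_FILL_PUMP } RtFillMode;

typedef struct {
    RtFillMode mode;     /* which actuator is currently doing the filling */
    uint16_t elapsed_s;  /* seconds spent filling through the valve alone */
} RtFill;

/* Hypothetical helper, meant to be called once per second. */
void rt_fill_tick(RtFill *f, bool rt_full)
{
    if (rt_full) {                 /* tank is full: stop filling */
        f->mode = RT_IDLE;
        f->elapsed_s = 0;
        return;
    }
    if (f->mode == RT_IDLE) {      /* not full: start with mains pressure */
        f->mode = RT_FILL_VALVE;
        f->elapsed_s = 0;
        return;
    }
    /* Mains pressure has not filled the tank in time: hand over to the pump. */
    if (f->mode == RT_FILL_VALVE && ++f->elapsed_s >= RT_FILL_TIMEOUT_S) {
        f->mode = RT_FILL_PUMP;
    }
}
```

Returning a mode rather than driving pins directly keeps the timing logic separate from the actuator wiring, which makes it easy to exercise on a PC before it goes anywhere near the PIC.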

Above is a stylized diagram and schematic of the sensor setup inside the roof deck tank. Pull-up resistors (located in the control panel) mean that a sensor's output is low when the sensor is submerged in water.

The control panel is located adjacent to GT. The enclosure contains all of the electronics, actuator control circuitry (to switch the DC solenoid valves and the 500-watt AC pump), and power supply. It also has indicator LEDs arranged to mimic two bar graphs, indicating the water level in each of the tanks, as well as LEDs for the status of the solenoid valves and pump. There are also LEDs for the RT overflow condition and the empty-tank condition for both GT and RT. A Sonalert buzzer provides the audible alarm.

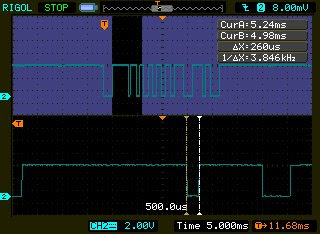

The MCU is a PIC16F627A. Sensor outputs from both tanks are filtered by a simple passive RC low-pass filter before being fed to a 74AC540 octal inverting buffer, whose outputs then drive both the LEDs and the input pins of the MCU.

I'll skip details of the other parts of the firmware and simply discuss the sensor diagnostics part. If I recall correctly, years ago, before upgrading the circuit to an MCU-based system, there had been at least one instance when one of the 24-gauge wires to the stainless steel sensors snapped off. Can't remember what havoc that wreaked, but it was a fault condition nevertheless that eventually had to be repaired. The diagnostics algorithm that's been included in the firmware is based on the fact that, depending on the water level, some combinations of sensors that are "on", so to speak, are valid and some are not. And by "on" here we mean that the sensor is submerged in water. The diagnostic routine simply checks whether the current combination of sensors that are on is valid. If it is, the program just continues. If the combination is invalid, the diagnostics routine keeps looping back and checking the sensors until the fault is corrected.

For instance, when the 100% water level probe is on then obviously all the other sensors must be on too. If any are not then a sensor fault condition exists. So, if the 25%, 50%, and 100% water level sensors all purport to detect water but the 75% sensor doesn't, something must be wrong.
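The validity rule above can be sketched in C as follows. This is only an illustration under assumptions, not the code running on the PIC: the bit assignment (bit 0 = 25% up to bit 3 = 100%, with a set bit meaning "sensor is wet") and the names `LEVEL_MASK` and `levels_valid` are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumed bit layout: bit 0 = 25%, bit 1 = 50%, bit 2 = 75%, bit 3 = 100%.
   A set bit means the sensor reports water. */
#define LEVEL_MASK 0x0Fu

/* Valid readings are 0000, 0001, 0011, 0111 and 1111: every sensor below the
   highest wet one must also be wet. Such a value has the form 2^k - 1, and
   for those values (and only those) v & (v + 1) is zero. */
bool levels_valid(uint8_t levels)
{
    uint8_t v = levels & LEVEL_MASK;
    return (v & (uint8_t)(v + 1u)) == 0u;
}
```

With this encoding the example just given (25%, 50% and 100% wet, 75% dry) is `0x0B`, and `levels_valid(0x0B)` returns `false`. Note that the check only says the pattern is impossible; it cannot tell whether a lower sensor has gone open or a higher one is stuck on.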

And that was precisely the fault condition this morning. Which led to the system automatically shutting off all the valves and pump and switching on audible and visual alarms which in turn sent me into panic mode. Given that the 75% level indicator LED for the upper tank was off while the 100% level LED was on, I jumped to the conclusion that it must be a loose or corroded connection. And I then proceeded to check the 75% level wiring and wire connections. Turned out I was wrong. It was in fact the 100% level sensor that was on the blink. And it wasn't even an electrical fault. The sensor is a float switch and apparently the float had become stuck--just slightly. Since the water level at that time was below the 75% sensor, the float actually dropped on its own while I was checking the stainless steel sensors. Algae or light mud could've been the culprit.